I’m currently reading Gillian Tett’s ‘Anthro-Vision: How Anthropology Can Explain Business and Life’.

Her book reminded me of an article I wrote on LinkedIn, so I thought I’d tweak it and reshare it here.

Enjoy!

— Jason

In ancient Greece, if you needed to make a big decision or find answers to life's most important questions, such as "Should I have an affair with Helen of Sparta?" or "Should I go rescue Helen?" or "Should I wage war against Troy?", you would go see the Oracle of Delphi.

The Oracle was only available one day a month, nine months of the year. If you wanted to see her, you'd hike up to the Temple of Apollo on the slopes of Mount Parnassus. You'd then purify yourself in the nearby Castalian Spring, make a sacrificial offering, and then queue up.

Once it was your turn, you'd get on your knees and give the Oracle your question. The Oracle would then go into a trance, and after a few days, she would come out of it and give you her predictions.

From the Oracle of Delphi to the palm readers of Asia to Nostradamus, humans have craved prophecy since the dawn of time. It's deeply embedded in our nature to want to know what's going to happen next because we all want to make the right decision.

What makes the future terrifying is that it is unknown and uncertain. If only we had a crystal ball and could make decisions knowing the outcome in advance.

The Oracle of Modern Times

Fast forward to 2025, and we have a new oracle. Its name is big data, or "artificial intelligence", or "deep learning", or "Watson", or "ChatGPT".

We climb up to the Temple of Artificial Intelligence and ask the Oracle, "I built a SaaS product. What's the best way for me to distribute it?" or "How does a blockchain work, and how can I make money from it?" or "Forecast the revenue of this new product I created over the next 5 years."

Because of all this uncertainty, our present-day oracle is a $397.27 billion[1] industry.

The Paradox of Big Data: More Information, Fewer Insights

Despite the sheer size of this industry, the return on investment is surprisingly low. Spending on big data is easy, but using it well is hard.

According to Gartner, in 2017, 85% of big data projects failed [2], and I predict (lol) that number will only rise with the recent AI boom.

What I've seen, both as a poker player and in marketing, is that despite having more data systems, people aren't making better decisions, and they're certainly not coming up with more interesting ideas. I find this fascinating.

A few months ago, a potential investor asked me, “Are you an opportunist? The reason I ask is because I want to know what motivates you to do what you do.”

The common thread running through my career has always been understanding people.

I want to know why people do what they do and why they make the decisions they make. I like to observe people, culture and society. I like to spot patterns of human behaviour and build models that help predict what people are going to do.

That means I have to ingest and synthesise a lot of data.

Data was how I built a career as a professional poker player. It informed my decisions in high-stakes, high-pressure situations. Data is also how I improve my running and half-marathon training. So it baffles me when I see that data isn't helping people make better decisions.

What I suspect is really going on is that people use data to confirm their beliefs instead of using it to test, and potentially disprove, their hypotheses.

When Big Data Misses the Mark

A perfect example of this comes from Tricia Wang. Wang is a technology ethnographer. In 2009, she was hired for a research position at Nokia. At the time, Nokia was one of the largest mobile phone companies in the world.

Wang had spent a lot of time in China learning about the informal economy. She worked as a street vendor selling dumplings and spent nights in internet cafes with Chinese youths so she could understand how they were using games and mobile phones.

All the qualitative data she was gathering helped her see a big change that was about to happen among low-income Chinese people: even though they were surrounded by adverts for all kinds of luxury products, the ads that enticed them most were ads for the iPhone. These ads promised them entry into a better, high-tech future.

While living in urban slums, Wang also discovered that people would save over half of their monthly income just to buy a phone.

After years of living like this, Wang began to piece the data points together. By connecting things that seemed random, from selling dumplings to tracking how much people were spending on their phone bills, she was able to build a much more holistic picture of what was about to happen in China: even the poorest people would want a smartphone, and they would do almost anything to get their hands on one.

At the time Wang was doing this, the iPhone had just been released. A lot of tech experts, such as TechCrunch writer Seth Porges, looked at the iPhone and said it was just a fad, because who would want to carry around a phone that was heavy, had poor battery life, and broke if you dropped it?

Wang, however, had gathered a lot of data and was confident that the smartphone industry would take off. She shared her insights with Nokia, but Nokia wasn't convinced because her data wasn't big data. They said, "We have millions of data points, and we don't see any indicators of anyone wanting to buy a smartphone, and your data set of 100, as diverse as it is, is too weak for us to even take seriously."

Wang rebutted with:

"Nokia, you're right. Of course you wouldn't see this, because you're sending out surveys assuming that people don't know what a smartphone is, so of course you're not going to get any data back about people wanting to buy a smartphone in two years. Your surveys, your methods have been designed to optimize an existing business model, and I'm looking at these emergent human dynamics that haven't happened yet. We're looking outside of market dynamics so that we can get ahead of it."

Her findings were dismissed because she had 'too few data points'. We all know how that story ended.

This is the cost of missing something when you don't build good, strong and robust proprietary data.

Nokia's not alone. Lots of organisations, of all shapes and sizes, are sceptical of data that hasn't been spat out of a quantitative model and doesn't appear as a number in a spreadsheet.

This isn't big data's fault. This is a human fault. It comes down to the way we use big data to justify our actions, and it's our responsibility to rethink how we use it.

The Limitations of Quantification

Big data succeeds when it quantifies very specific environments with stable assumptions and highly repeatable interactions, such as shipping container logistics or electrical power grids. These systems are all neatly contained.

However, this is only a small part of reality. Most systems in life are dynamic, especially systems that involve human beings and behaviour. The forces acting on these systems are so complex and unpredictable that we often can't tell cause from effect, which makes them very difficult to model.

You see, human behaviour is a complex adaptive system: as soon as you try to predict it, new factors emerge and different conditions unfold. It's a never-ending cycle. Just when you think you understand something about how people behave, something else enters the picture and changes what that behaviour means. This is why, in behavioural science, context is everything.

The Dangers of Overvaluing Numbers

Relying on big data alone increases the chance that you'll miss something, while lulling you into the illusion that you know everything. We also have to remember that all data comes from the past. It's merely a snapshot in time.

Big data feeds our quantification bias: the unconscious belief that what we can measure is more valuable than what we can't.

I see this everywhere: in business, at work, in running, in personal development, and so on. It makes you so fixated on a number that you can't see anything beyond it, even when the evidence in front of you says otherwise.

But I get it. Data and numbers make you feel like everything is under control. The problem is that quantifying everything is addictive, and it becomes very tempting to throw out data simply because it can't be expressed as a number.

It's very easy to slip into thinking that maths and statistics are the silver bullet for all of our problems. And this is the greatest problem for any organisation: often, the future it needs to predict in order to survive isn't the needle in the haystack, but the wildfire burning outside the barn.

The greatest risk to any organisation is never the one it can see coming. It's the one it can't see.

Being blind to the unknown leads you to make the wrong decisions and miss out on something big. Ask Nokia. Ask Blackberry. Ask Blockbuster.

So how do we avoid this?

To Predict the Future, We Must Look to the Past

The answer lies in the painting of the Oracle of Delphi.

Do you see those people surrounding the Oracle? There's even one guy holding an orange notebook. They are temple guides.

Have you ever wondered what these temple guides are doing?

Well, they worked hand in hand with the Oracle. When the people of ancient Greece came to put their questions to the Oracle of Delphi, the temple guides would observe each inquirer's emotional state and ask follow-up questions: "Why do you want to know the answer to this question?" "What are you going to do with this information?"

The temple guides would take this qualitative information, interpret the Oracle's babblings, and present the inquirer with an answer.

The oracle didn't do it alone, and neither should big data.

I'm not saying that big data can't make valid predictions. What I'm saying is that big data also needs ethnographers, user researchers and people who can gather qualitative data. Tricia Wang calls this "thick data": precious data drawn from human stories, emotions and interactions that can't be quantified. This is how you make your data set stronger, more reliable and more robust.

Thick data comes from very small samples but delivers incredible depth of meaning. It aims to understand what is missing from the models. It grounds business questions in human questions, which is why the combination of big and thick data forms a more complete picture.

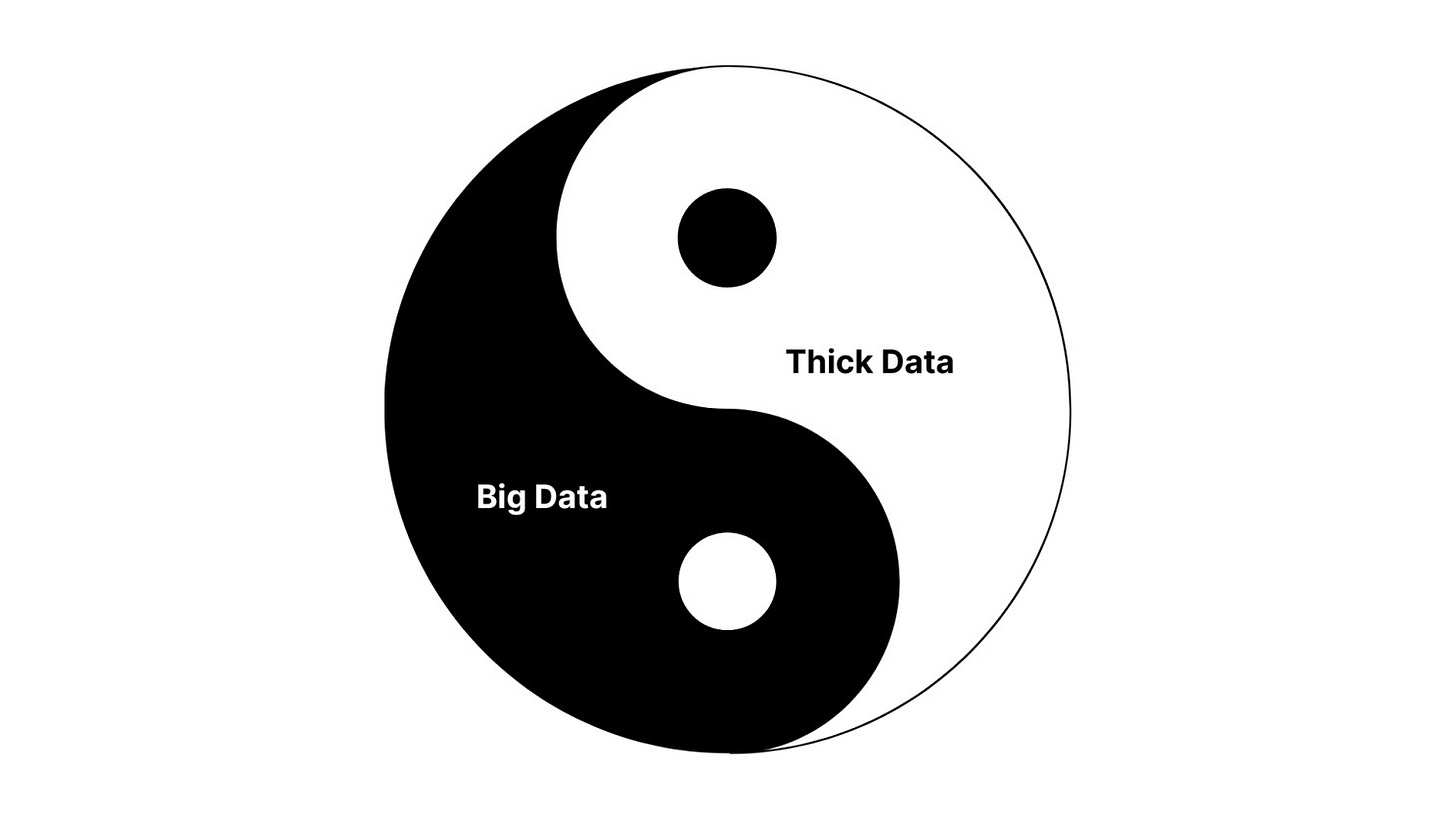

It's not a case of either/or, or of one being better than the other. It's the synergy of both data sets. Think of it as yin and yang. Big data offers insights at scale and leverages the best of machine intelligence, whereas thick data rescues the context lost in big data's abstraction and leverages human intelligence.

Netflix is a good example of this. When they started asking "Why is this happening?", they unlocked a new transformation in their business.

Netflix is known for their recommendation algorithm. They offered a $1 million prize to anyone who could improve it, but the ideas people suggested only improved the algorithm marginally.

So to find out what was going on, they hired Grant McCracken, an ethnographer, to gather thick data insights.

What McCracken discovered didn't show up in the quantitative data: people loved to binge-watch TV shows. Once he revealed this insight to Netflix, the data science team was able to scale it with their quantitative data. What they did was simple but highly impactful.

Instead of recommending shows from other genres, or different shows watched by similar users, they offered users more of the same show so that they could keep binge-watching.

After testing and validating the idea, Netflix redesigned their entire viewing experience to encourage binge-watching. By integrating big data and thick data, Netflix improved not only their business but also how we consume media.

But this isn't about how fast a stock can grow. It's about using big data more responsibly. As more of our lives become automated, from social media algorithms to getting into the lift at work, to pensions and employment, we are all likely to be affected by quantification bias.

So let's integrate big data with thick data. Whether this happens in companies, nonprofits, government, software or algorithms, it all matters, because it means we're collectively committed to building better data, making better decisions and forming better judgements.

That's how we'll avoid missing the wildfire burning outside the barn.

[1]: https://www.fortunebusinessinsights.com/industry-reports/big-data-technology-market-100144

[2]: https://www.datascience-pm.com/project-failures/

Jason Vu Nguyen is the co-founder and CEO of Bloomstory, where he writes the Lindy Effect column – focusing on human behaviour, technology and business.

You can follow him on LinkedIn.

At Bloomstory, we help brand, product and marketing teams test whether their content, messaging or product works by simulating how real customers would react, using AI-powered focus groups.

We are looking for CMOs and founders to take a sneak peek at our MVP and share their thoughts. If this is you, please reach out, as we’d love to talk.

Tired of planning for AI adoption but getting nowhere? We also offer AI training and consulting.