I Read All The Research On Synthetic Personas

An exploration into the pros and cons of synthetic personas

I was a professional poker player for 11 years.

After playing my last hand in August 2021, I worried my career at the tables had left me unprepared for the business and marketing world.

But 4 years later and with a bit of perspective, I now see a different story. Those years in poker effectively handed me a master’s degree in behavioural science and AI.

Today, I channel that knowledge into the small startup I co-founded to help businesses understand why customers buy and predict what they’ll do next.

Poker has a long history as a research topic. As early as 1944, the founding fathers of game theory, mathematician John von Neumann and economist Oskar Morgenstern, used mathematical models to analyse simplified poker games.

The biggest breakthrough came in the last 2 years of my career when Carnegie Mellon teamed up with Facebook AI (now Meta) to build a poker bot named Pluribus.

By playing huge numbers of simulated hands against copies of itself, Pluribus became the first AI to beat top professional players in six-player no-limit Texas hold’em. This was a big milestone in AI research because multiplayer poker was considered a holy grail for AI, given its complexity, variance and the number of players involved.

Knowing all of this and seeing how large language models (LLMs) like ChatGPT disrupt industries, there’s an interesting application of AI in marketing and business I see unfolding in front of me – synthetic personas.

Synthetic personas are AI-generated representations that mimic human behaviors and interactions.

Extending this idea to marketing, I can’t help but wonder: what if we could test 100 marketing campaigns in the time it takes to run one focus group? Or what if we could send a marketing email to 100 AIs first, to catch any mistakes before rolling it out to a 50,000-person email list?

Whether it’s advertising, website copy, or social posts, there are real-world consequences to getting your messaging wrong, so why not practice on a synthetic focus group first?

Large language models (LLMs) are good at roleplaying, and if you get enough of them together, each with different personas, you can simulate how the market would react to your business or marketing idea, potentially mitigating the risks.
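To make the idea concrete, here is a minimal sketch of what a synthetic focus group loop could look like. Everything in it is an assumption for illustration: the `Persona` class, the prompt wording, the three-way sentiment scale, and especially `fake_model`, which is a stand-in so the sketch runs offline. In practice you would swap in a call to whichever LLM you use.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Iterable

VALID_ANSWERS = {"positive", "neutral", "negative"}


@dataclass(frozen=True)
class Persona:
    """A hypothetical persona: a name plus a short description."""
    name: str
    description: str


def build_prompt(persona: Persona, message: str) -> str:
    """Wrap a marketing message in a role-play instruction for one persona."""
    return (
        f"You are {persona.name}: {persona.description}\n"
        f"React to this marketing message as that person would:\n{message}\n"
        "Answer with exactly one word: positive, neutral or negative."
    )


def run_focus_group(
    personas: Iterable[Persona],
    message: str,
    ask_model: Callable[[str], str],
) -> Counter:
    """Ask every persona for a reaction and tally the answers."""
    tally = Counter()
    for persona in personas:
        answer = ask_model(build_prompt(persona, message)).strip().lower()
        # Off-script replies are counted separately rather than guessed at.
        tally[answer if answer in VALID_ANSWERS else "unparsed"] += 1
    return tally


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call (assumption): price-sensitive personas say no."""
    return "negative" if "budget" in prompt else "positive"


personas = [
    Persona("Alex", "a budget-conscious student"),
    Persona("Sam", "a busy operations manager"),
    Persona("Priya", "a budget-conscious freelancer"),
]
print(run_focus_group(personas, "Upgrade to Premium for $49 a month.", fake_model))
```

The tally is only meaningful in aggregate, and only as good as the personas and the model behind `ask_model`, so treat the output as a prompt for further research rather than a verdict.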

Creating synthetic personas and placing them in a simulation (a defined digital world where multiple AI agents interact to model complex behaviours or social interactions) is not a new idea. If you think about it, the characters in The Sims are synthetic personas inside a simulation, but they lack autonomy.

We simulate as many things as we can: stock markets, wind tunnels, earthquakes, chess, protein folding and so on. We do this because simulating is a way for us to quickly learn what works and what doesn’t.

Even in poker, I used game theory solver tools to simulate the optimal way to play a hand.

However, I do acknowledge that synthetic personas are a contentious subject, and they may raise ethical concerns for you, such as misrepresentation, bias, stereotyping, and the potential for manipulating data to produce desired outcomes.

But I hope to address these concerns and help you make sense of the current state of synthetic users, perhaps even convince you that using synthetic personas can be productive, provided the right caveats are in place.

A Better Strategic Starting Point

Whilst simulating can help us learn faster and provide a somewhat risk-free environment for testing, it doesn’t fully capture the complexity of real-life situations.

Which leads me on to my next point: You should absolutely not be trying to replace real humans with synthetic humans.

There is no substitute for meeting people face-to-face, listening with an open mind, studying context, and, above all, noting what people do not say, as much as what they do talk about.

Behaviour never occurs in a vacuum. It’s why the infamous prisoner's dilemma is impractical. It exists in a context-free, theoretical universe with no real-life parallels and lacks the richness that characterises real human interactions.

When it comes to reality, context is everything. It guides our decisions. This is why the rational attempt to devise a universal context-free law for human behaviour is futile.

However, there are a few scenarios where a synthetic persona makes sense – focus groups, need identification, concept testing, hypothesis generation and growth exploration.

I see synthetic personas as similar to the game theory tools I used in poker – they give you a better starting point for making your business decisions.

And that is what we at Bloomstory try to do with AI. While multimodal LLMs cannot capture all this relevant nuance and detail, they give us a more precise and dynamic starting point to model human behaviour.

A Study in Simulation

So what does academic research say? What do we know?

I want to share what I think are the most important research papers around the synthetic persona space.

Three papers made a big splash around 2022-2023:

Tl;dr: LLMs can mirror human preferences in some contexts but struggle to generalise human behaviour across diverse populations.

Large Language Models as Simulated Economic Agents: What Can We Learn from Homo Silicus? – John J. Horton

Tl;dr: GPT-based models can replicate aspects of human economic decision making but raise concerns over bias and reproducibility.

Tl;dr: Turing Experiments (TEs) reveal that AI models consistently distort real-world human behaviour in simulations.

They all show that there's a lot of promise in this idea of using language models to simulate agents that mimic human subjects.

Then, in 2023, came the most cited paper to date on this topic:

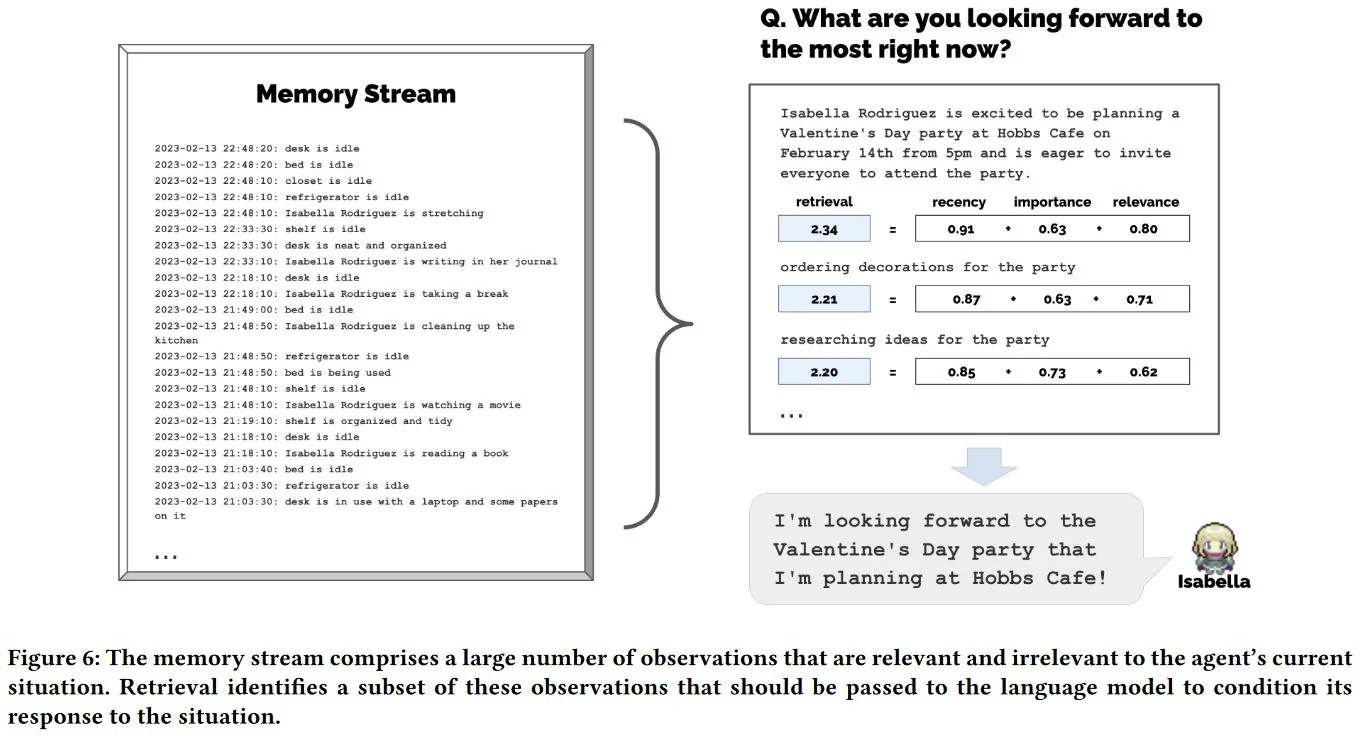

In this paper, the researchers built a world for the AI agents, and they simulated interactions in digital spaces to see what the agents would do.

The researchers found some similarities between real human behaviour and the behaviour of the AI agents.

Tl;dr:

Built AI-driven agents using LLMs

Simulated interactions in digital spaces, including social and economic behaviours

Measured how closely agent behaviour mirrored real human patterns

Agents were able to cooperate, negotiate, self-organise and exhibit behaviours like forming groups or reacting to environmental changes

This could be useful for testing interventions before real-world deployment

Then, last year, on the 15th of November 2024, another paper was published that caused a big reaction:

In this paper, the researchers conducted two-hour interviews with over 1,000 participants.

They then created a synthetic version of each participant from the interview transcript, and had both the real participants and their synthetic counterparts perform various tasks and answer questions.

The results showed that the AI agents matched their human counterparts’ answers about 85% as accurately as the participants matched their own answers when retested two weeks later. This level of accuracy suggests AI can capture nuanced personality traits and decision-making patterns.

Obviously, this is not the same as putting an agent out in the real world where they have to interact in an open system. All of the studies have deployed AI agents in closed systems, but the results of their research seem promising.

And then last month, we had a really interesting paper published called:

The paper compared human and synthetic responses in surveys on sustainability, financial literacy, and labor force participation.

The researchers tested responses from countries around the world, using GPT-4-based synthetic users.

The short takeaway is that AI closely matched human responses but diverged in non-WEIRD countries (WEIRD stands for Western, Educated, Industrialised, Rich and Democratic).

Overall, the results are quite interesting.

However, is 85% accuracy from GPT-driven personas good enough, or can we do better?

In my experience with risk management and decision making, 85% accuracy doesn’t matter if the other 15% is catastrophic (this is related to the concept of ergodicity, but more on this topic another time).
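A quick back-of-the-envelope sketch shows why. The numbers below are made up for illustration and do not come from any of the studies above: assume you are right 85% of the time and grow your stake by 20%, and wrong 15% of the time and lose 80% of it. Averaged across many parallel players the bet looks profitable, but a single player who keeps reinvesting shrinks over time.

```python
import math

# Illustrative assumptions, not figures from any study.
P_RIGHT = 0.85      # share of decisions where the model is right
GAIN_FACTOR = 1.2   # stake multiplier when right (+20%)
LOSS_FACTOR = 0.2   # stake multiplier when wrong (-80%)


def ensemble_average(p: float, up: float, down: float) -> float:
    """Expected multiplier of one bet, averaged across many parallel players."""
    return p * up + (1 - p) * down


def time_average(p: float, up: float, down: float) -> float:
    """Long-run per-bet multiplier for one player who reinvests every time."""
    return math.exp(p * math.log(up) + (1 - p) * math.log(down))


ensemble = ensemble_average(P_RIGHT, GAIN_FACTOR, LOSS_FACTOR)
per_bet = time_average(P_RIGHT, GAIN_FACTOR, LOSS_FACTOR)

print(f"Average across players, per bet: {ensemble:.3f}")
print(f"One player over time, per bet:   {per_bet:.3f}")
print(f"One player's stake after 100 bets: {per_bet ** 100:.6f}")
```

With these assumed numbers the first figure is above 1 (roughly 1.05) while the second is below 1 (roughly 0.92), which is the gap between "works on average" and "works for you" that makes the rare catastrophic miss matter so much.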

In our pursuit to de-risk the remaining 15% and drive up accuracy and robustness, Bloomstory has been feeding other data into the LLM to create a richer and more complex portrait for each synthetic persona.

We augment the synthetic personas by feeding in behavioural, psychological, emotional, linguistic and cultural data. This is so that the synthetic personas aren’t merely stereotypical but mirror real people in the real world as closely as possible.

The Limitations

As amazing as technology and machines are, they’re not a silver bullet for all of our problems.

It’s tempting to ask ChatGPT to solve all of our work problems, to calculate who is right in an argument, to make decisions for us instead of spending the time to think through the problem ourselves.

But you must remember that ChatGPT and other LLMs tend to be sycophantic.

They will often tell you exactly what you want to hear, and if you’re not careful, you will end up stuck in an echo chamber.

Synthetic personas are clusters of patterns. Whatever bias is in the data you feed in will also come out the other end. Garbage in, garbage out, as they say.

At the individual level, people are very hard to predict. Synthetic personas only work at the macro/collective level.

But what about the other limitations?

Instability in decision making: LLMs are probabilistic in nature. That means the output can differ from one run to the next, even with an identical prompt.

Prompt brittleness: Small changes in the wording of a prompt can significantly alter the output.

Memorisation vs Understanding: LLMs don’t understand the way people do; they learn patterns from their training data and predict the most plausible answer.

Fine-tuning and retrieval-augmented generation (RAG) limitations: There is evidence to suggest that even with training on specific behavioural datasets, LLMs struggle to consistently mimic human-like responses.

Confidently nonsensical: AI can construct narratives and information that sound confident and coherent but are simply wrong.

Lack of lived experience: We can inject the synthetic personas with all the data we want but real people bring a vast and rich store of context, emotion, personal history and lived experience. AI may be able to simulate happiness, but it will never feel it.

There are many ways to combat these limitations, such as fine-tuning an LLM, cleaning data and mitigating bias, controlling the temperature values for variance, and so on.
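To show what the temperature setting does, here is a toy sketch of temperature-scaled softmax, the step that turns a model's raw scores into the probabilities it samples from. The three candidate answers and their scores are hypothetical, and this is a simplified illustration rather than any vendor's actual implementation.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores a model might assign to three possible reactions.
answers = ["buy", "maybe", "ignore"]
scores = [2.0, 1.0, 0.5]

for temperature in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(scores, temperature)
    summary = ", ".join(f"{a}: {p:.3f}" for a, p in zip(answers, probs))
    print(f"temperature={temperature}: {summary}")
```

At a low temperature almost all of the probability lands on the top-scoring answer, so repeated runs agree more often. At a high temperature the probabilities flatten out and the same prompt produces more varied answers.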

But we can’t offload everything to AI. It’s vital we keep ‘humans in the loop’.

As with all tools, the value of synthetic personas depends entirely on the skill of the person using them. A good researcher knows that they are not a way to replace other intellectual tools but to complement them.

Just as adding salt to food binds the ingredients and enhances the flavour, adding synthetics to the marketing research process creates a deeper and richer analysis.

A Peek Around Corners

Eleven years at the poker table have taught me that predictive models are powerful, but understanding their limitations is essential. The same principle applies to synthetic personas.

My co-founder, Vanessa, and I see the convergence of AI, behavioural science, and simulations as a way to hold up a mirror and ‘peek around the corner’ into possible futures for solving your business problems.

That includes testing market reactions, exploring consumer needs, finding new areas of growth and aligning brand messaging.

All of this reduces uncertainty and provides a competitive edge to businesses.

Another way to think about what Bloomstory is doing: it is a flight simulator for marketing and business strategy. It’s safer and cheaper to crash in a simulation than in reality, and every run teaches you something new.

And so, if this article has piqued your curiosity, I invite you to bring your marketing challenges to our table, where we'll help you read the room before you place your bets.

Jason Vu Nguyen is the co-founder and CEO of Bloomstory, where he writes the Lindy Effect column – focusing on human behaviour, technology and business.
